The overall quality of app design has seen major advances in recent years. The Touch User Interface (TUI) is very quickly becoming a dominant form of human-computer interaction.

Touché: Enhancing Touch Interaction on Humans, Screens, Liquids, and Everyday Objects

Keep in mind that touch is still in its technological infancy at best. Many problems with touch input are not yet resolved: poor discoverability of interactions, gestures that are hard to learn and tricky to remember, the lack of hover (which signals user intent), and the fact that using a touch-screen while travelling is not always optimal. Researchers at Disney and Carnegie Mellon have developed Touché, an interface system where multiple gestures can be added to other objects, including “smart doorknobs”.

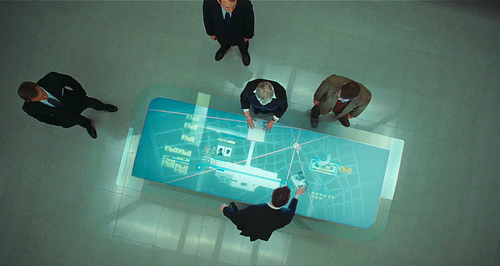

With the significant know-how gained from developing the Kinect technology for Xbox, Microsoft have a considerable advantage in developing the next generation of computing devices and form factors. That generation may not require touch at all. Touch will of course still be available, but future devices will instead offer users contextual input via a plethora of interaction types: keyboard, touch, voice, gesture, text input and so on.

Touch is quickly becoming the dominant form of user input, but it’s not the only way we will interact with our devices. Beyond touch will come gesture and voice, but as with all things in the future, the reality will not be all of one or all of another. It will be a hybrid, mixed ecosystem of solutions presented contextually. For example, imagine you are typing away in an IM application and want to make some food, so you walk away from your computer. The Skype application reacts to the change in context and switches to voice input and read-aloud playback of any new messages in the open thread while you are away. When you return, it switches back to normal typing input mode.
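To make the idea of contextual input a little more concrete, here is a minimal TypeScript sketch of an app choosing an input mode from the user's context. Everything in it is hypothetical: `InputMode`, `UserContext`, `chooseInputMode` and `Conversation` are illustrative names, not part of Skype or any real messaging API, and the presence signal is simply assumed to exist.

```typescript
// Hypothetical sketch: none of these names belong to a real messaging API.
type InputMode = "typing" | "voice";

interface UserContext {
  // Assumed to come from some presence signal (camera, proximity sensor...).
  atComputer: boolean;
}

// Pick the input mode that suits the current context.
function chooseInputMode(ctx: UserContext): InputMode {
  return ctx.atComputer ? "typing" : "voice";
}

class Conversation {
  mode: InputMode = "typing";
  readonly displayed: string[] = []; // messages shown on screen
  readonly spoken: string[] = [];    // messages read aloud

  // Called whenever the (assumed) presence signal changes.
  updateContext(ctx: UserContext): void {
    this.mode = chooseInputMode(ctx);
  }

  // Route an incoming message to the screen or to read-aloud playback.
  receive(message: string): void {
    if (this.mode === "voice") {
      this.spoken.push(message);
    } else {
      this.displayed.push(message);
    }
  }
}
```

The point of the sketch is only that the mode is derived from context rather than chosen by the user. A real system would need far richer signals than a single boolean.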

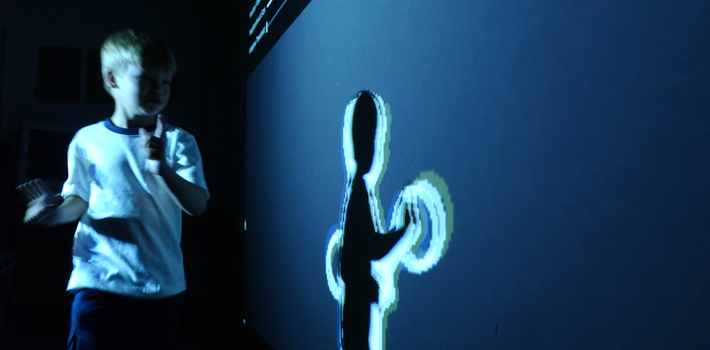

Think of the Kinect gaming system meeting the camera on your smartphone: you could wave at the phone camera rather than brushing your finger across a glass screen. If you had your hands full this would be really useful.

In computer interfaces, two types of gestures are distinguished. Online gestures can be regarded as direct manipulations like scaling and rotating. In contrast, offline gestures are usually processed after the interaction is finished; e.g. a circle is drawn to activate a context menu. Read the rest of the article on this Wikipedia page.

Offline gestures: Gestures that are processed after the user's interaction with the object has finished. An example is the gesture to activate a menu.

Online gestures: Direct manipulation gestures. They are used to scale or rotate a tangible object. How would you approach a touch interface that supports direct-manipulation of the UI?
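The difference between the two kinds of gesture can be sketched in code. The TypeScript below is a rough illustration under stated assumptions, not a production recogniser: `PinchScale` and `StrokeRecorder` are hypothetical names, and the "circle" test is a deliberately crude closed-loop heuristic with an arbitrary 20% threshold.

```typescript
interface Point { x: number; y: number; }

function distance(a: Point, b: Point): number {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  return Math.sqrt(dx * dx + dy * dy);
}

// Online gesture: a two-finger pinch, re-evaluated on every move event so the
// object scales while the fingers are still on the surface.
class PinchScale {
  scale = 1;
  private lastSpread: number;

  constructor(a: Point, b: Point) {
    this.lastSpread = distance(a, b);
  }

  move(a: Point, b: Point): number {
    const spread = distance(a, b);
    if (this.lastSpread > 0) {
      this.scale *= spread / this.lastSpread;
    }
    this.lastSpread = spread;
    return this.scale;
  }
}

// Offline gesture: points are only recorded during the stroke; recognition
// runs once, after the finger lifts.
class StrokeRecorder {
  private points: Point[] = [];

  move(p: Point): void {
    this.points.push(p);
  }

  // Crude heuristic (an assumption for illustration): the stroke counts as a
  // circle if it ends close to where it began relative to its path length.
  end(): "open-context-menu" | "none" {
    const pts = this.points;
    this.points = [];
    const first = pts[0];
    const last = pts[pts.length - 1];
    if (first === undefined || last === undefined || pts.length < 3) {
      return "none";
    }
    let pathLength = 0;
    let prev = first;
    for (const p of pts) {
      pathLength += distance(prev, p);
      prev = p;
    }
    return distance(first, last) < 0.2 * pathLength ? "open-context-menu" : "none";
  }
}
```

Note where the work happens: `PinchScale.move` produces a new scale on every event, while `StrokeRecorder` does nothing useful until `end` is called.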

RFID chips allow app users to pay and perform other transactions without taking their phone from their pocket.

So what of the designers tasked with creating such complex UI? How will this work in reality? Larger teams are one possibility. Just look at the demands placed on the games industry, where team sizes have ballooned over the last decade in reaction to the increasing power of games consoles and the constant march towards photo-realistic graphics. To help designers cope with the demands that will be placed on them, a new class of design tool is needed, one capable of handling lots of dynamic screen activity: dense gesture regions containing rich interactions, presented in increasingly intuitive UI packages.

Designs are passing through more and more hands. Collaboration is essential and, via the internet, distributed teams are working with each other more than ever.